A bitter lesson of the past month is that viruses move fast. The good news of the deployment of the first COVID-19 vaccines was accompanied by the bad news that a new variant of the virus had emerged, quickening the disease’s spread and pushing up the number of patients treated in hospital.

Faced with an evolving virus, the UK government must intervene swiftly, even when evidence is limited. But the urgency of the situation should not stop us from using smart evaluation strategies to assess the effectiveness of the measures taken.

Right now, the UK is repeatedly missing opportunities to gain quality evidence to help us manage the pandemic. Smart, focused evaluations let us rapidly assess drugs, treatments and defences and learn what works best. They can also be run alongside control measures as those measures are rolled out. This makes us faster in the long run, not slower.

Three opportunities spring to mind: vaccines, schools and contact tracing.

Vaccine strategy

The Joint Committee on Vaccination and Immunisation has advised delaying the second dose of the Pfizer/BioNTech vaccine from the recommended three weeks to 12 weeks, in order to get more people vaccinated with their first dose.

Without questioning the public health reasoning behind this decision, which is driven by limited supply, it is still worth quantifying the effect of extending the dose interval.

This is particularly pertinent for the elderly, who have been prioritised for vaccination. A recent small real-world study found substantial variation in immune response three weeks after the first dose among people aged over 80: seven out of 15 showed a weak antibody response, which then increased three weeks after the second dose. This may suggest that a shorter interval between doses would be better for some elderly people, but we can’t know without a robust evaluation.

A solid evaluation of how the longer dose interval affects vaccine effectiveness against diagnosed COVID-19 can be done cheaply and efficiently while the vaccination programme is rolled out, by giving a relatively small, randomly selected fraction of people their second dose after three weeks. As most people would still be assigned to the 12-week interval, this experimental design would still achieve the aim of wide first-dose coverage, and in just a few weeks we would have solid statistical evidence on the comparative effectiveness of the two intervals.
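The allocation step itself is simple. Below is a minimal Python sketch, assuming each person is identified by an ID and that 10% are assigned to the three-week interval. The function name `allocate_dose_interval`, the 10% fraction and the seed are all hypothetical choices for illustration, not details of any actual programme.

```python
import random
from collections import Counter


def allocate_dose_interval(person_ids, short_fraction=0.1, seed=2021):
    """Randomly assign a fixed fraction of people to a 3-week second-dose
    interval and everyone else to the 12-week interval.

    short_fraction and seed are illustrative assumptions only.
    """
    rng = random.Random(seed)      # seeded so the allocation is reproducible and auditable
    ids = list(person_ids)
    rng.shuffle(ids)               # random order breaks any link to priority group or location
    n_short = round(short_fraction * len(ids))
    short = set(ids[:n_short])     # the first n_short shuffled people get the short interval
    return {pid: ("3 weeks" if pid in short else "12 weeks") for pid in ids}


allocation = allocate_dose_interval(range(1000))
counts = Counter(allocation.values())
print(counts)  # Counter({'12 weeks': 900, '3 weeks': 100})
```

Taking a fixed number from a shuffled list guarantees the arm sizes exactly, rather than leaving them to chance. In a real study one would likely randomise separately within age bands, so that the over-80s discussed above are balanced across both intervals.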

We should seize the opportunity to provide the UK and the world with invaluable knowledge on how to plan optimal rollout strategies for the first mRNA vaccine ever to be approved.

COVID in schools

Before they were closed in the latest lockdown, the strategy in schools in England was to keep track of the spread of coronavirus by testing close contacts of confirmed cases every day for seven days using rapid tests, and only sending home those who tested positive.

Along with some fellow statisticians, I have argued that while this might seem an attractive plan, the limited accuracy of some of the rapid tests could make the exercise less effective than claimed, or even pointless.

In preparation for schools reopening, what was needed, and still is, is a well-designed comparison between any new policy relying on rapid tests and the current one of sending all contacts home.

A group of volunteer schools would be randomly allocated to different testing strategies. These could include pooled testing (in which samples are grouped and tested together) or a combination of PCR tests (which detect the virus’s genetic material) and rapid tests (which give results in 15 minutes but are less accurate). The primary outcomes of interest would be the number of confirmed cases over a set period and the average number of school days lost per pupil.
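Because whole schools, rather than individual pupils, would be allocated, this is a cluster-randomised design. A minimal Python sketch follows, assuming a hypothetical list of volunteer school IDs and four arms loosely based on the strategies named above; the arm labels, the function name `randomise_schools` and the seed are illustrative assumptions.

```python
import random
from collections import Counter

# Hypothetical arm labels, loosely based on the strategies described in the text.
STRATEGIES = [
    "send all contacts home",   # current policy, used as the comparator
    "daily rapid tests",        # test contacts daily, send home only those testing positive
    "pooled testing",
    "PCR plus rapid tests",
]


def randomise_schools(school_ids, strategies=STRATEGIES, seed=7):
    """Cluster randomisation: each whole school is assigned to one strategy.

    Schools are shuffled, then strategies are dealt out in turn, so arm
    sizes differ by at most one school.
    """
    rng = random.Random(seed)   # seeded so the allocation can be reproduced and audited
    schools = list(school_ids)
    rng.shuffle(schools)
    return {school: strategies[i % len(strategies)] for i, school in enumerate(schools)}


volunteers = [f"school_{i:03d}" for i in range(1, 102)]  # 101 hypothetical volunteer schools
arms = randomise_schools(volunteers)
print(Counter(arms.values()))
```

Outcomes such as confirmed cases and school days lost per pupil would then be compared between arms at the school level, since pupils within the same school are not independent of one another.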

With more than 30,000 schools in the UK, 152 local education authorities and four national systems, such a comparative evaluation study could get under way quickly, so we could learn what works rather than guessing. This would give parents and students some much-needed certainty after a very disruptive year.

Contact tracing

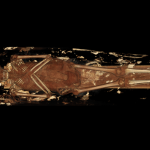

Given the vast sums the government has spent on the test, trace and isolate scheme, it is extraordinary that we were only recently given some clues to its effectiveness in stopping chains of transmission, based on linking a subset of cases with those of their contacts who had sought testing. In this subset, about 30% of self-isolating contacts of a confirmed case who sought a PCR test were found positive, half of whom had symptoms.

Besides linkage, we need targeted studies embedded in the test and trace system. A random sample of households or close contacts of positive cases would be selected and visited on a random day during the isolation period, and offered a swab test plus a short questionnaire about symptoms, occupation and demographics. This would give us solid evidence on the likelihood and timing of onward transmission to household members or close contacts, and on the factors influencing it, as well as measuring compliance with “stay at home” recommendations.
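Drawing the sample and the visit days takes only a few lines. The Python sketch below assumes contacts are identified by IDs and that the isolation period lasts 10 days; the isolation length, the sample size and the function name `plan_visits` are hypothetical choices for illustration.

```python
import random

ISOLATION_DAYS = 10  # assumption: length of the isolation period; the text does not specify it


def plan_visits(contact_ids, sample_size, seed=None):
    """Select a simple random sample of contacts (without replacement) and
    give each one a random visit day within the isolation period."""
    rng = random.Random(seed)
    sampled = rng.sample(list(contact_ids), sample_size)
    # randint is inclusive at both ends, so days run from 1 to ISOLATION_DAYS
    return {cid: rng.randint(1, ISOLATION_DAYS) for cid in sampled}


visits = plan_visits(range(5000), sample_size=200, seed=1)
```

Randomising the visit day, rather than always visiting on, say, day 5, spreads swabs across the whole isolation period, which is what allows the timing of onward transmission to be estimated.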

The large programme of asymptomatic testing that was announced on January 10 ought to be accompanied by carefully designed evaluations of its effectiveness in specific contexts of use. How does the benefit of detecting and isolating asymptomatic cases balance against riskier behaviour by people who test negative, including those who receive a false negative, which might increase transmission? We need to go and find out.

A record of success

Politicians and scientists prefer to deal with certainties, but in this pandemic, evaluation is the key to a rapid response in an uncertain situation.

The UK has a stellar record in randomised trials, going back to the 1948 trial of streptomycin for treating tuberculosis. A shining recent example is the large-scale Recovery trial, which in less than four months produced conclusive evidence of improved treatment for severely ill COVID-19 patients.

Recovery used an innovative design adapted to the urgency of the pandemic threat; new treatments could be added, and useless ones dropped quickly. Designs like these save lives by getting to a conclusive answer quicker, and can be embedded in the rollouts of new systems: this is the statistician’s craft.

Evaluation isn’t necessarily slow, arcane or backward looking. Done right, smart evaluations would be a valuable addition to our pandemic control toolkit. Statisticians around the country are ready to engage with scientists and the government to make this happen.

Sylvia Richardson receives funding from the Medical Research Council. She is President of the Royal Statistical Society.