The government is planning to change the law so that social media companies like Facebook and Twitter will have no choice but to take responsibility for the safety of their users.

Plans to impose a duty of care on online services mean companies will have to determine if content poses “a reasonably foreseeable risk of a significant adverse physical or psychological impact” to all of their users.

Failure to comply with the new duty of care standard could lead to penalties of up to £18 million or 10% of global annual turnover, and to access to their services being blocked in the UK.

The UK government has released its final response to the public’s input on the online harms white paper it published in April 2019. The response comes in anticipation of an “online safety bill” scheduled to be introduced in 2021.

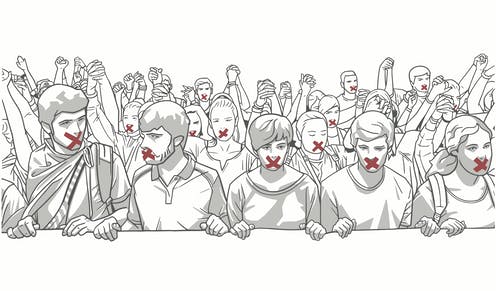

While well intentioned, the government’s proposals lack clear instructions to guide regulators and social media companies. In failing to provide them, the government has created a threat to freedom of expression.

An inconsistent track record

Under the proposals, companies will be required to take action to limit the spread of harmful content, proportionate to its severity and scale.

Currently, social media companies are only required to remove user-generated content hosted on their services under very specific circumstances (if the content is illegal, for example). Usually, they are free to decide which content should be limited or prohibited. They only need to adhere to their own community standards, with sometimes mixed results.

As Facebook reported in its community standards enforcement report, for the first quarter of 2020 it only found and flagged 15.6% of “bullying and harassment” content before users reported it. Conversely, the company detected 99% of all “violent and graphic” content before it was reported by users. This disparity in detection rates indicates that the processes used to identify “harmful” content work when criteria are clear and well-defined but, as the 15.6% figure shows, fail where interpretation and context come into play.

Social media companies have been criticised for inconsistently enforcing prohibitions on hate speech and sexist content. Because they only need to justify decisions to leave or remove legal but potentially harmful content based on their own community standards, they are not at risk of legal repercussions. If it’s unclear whether a piece of content violates rules, it is the company’s choice whether to remove it or leave it up. However, risk appraisals under the regulatory framework set out in the government’s proposals could be very different.

Lack of evidence

In both the white paper and the full response, the government provides insufficient information on the impact of the harms it seeks to limit. For instance, the white paper states that one in five children aged 11-19 reported experiencing cyberbullying in 2017, but does not demonstrate how (and how much) those children were affected. The assumption is simply made that the types of content in scope are harmful with little justification as to why, or to what extent, their regulation warrants limiting free speech.

As Facebook’s record shows, it can be difficult to interpret the meaning and potential impact of content in instances where subjectivity is involved. When it comes to assessing the harmful effects of online content, ambiguity is the rule, not the exception.

Despite the growing base of academic research on online harms, few straightforward claims can be made about the associations between different types of content and the experience of harm.

For example, there is evidence that pro-eating disorder content can be harmful to certain vulnerable people but doesn’t impact most of the general population. On the other hand, such content may also act as a means of support for individuals struggling with eating disorders. Given that such content is both risky for some and helpful to others, should it be limited? If so, how much, and for whom?

The lack of available and rigorous evidence leaves social media companies and regulators without points of reference to evaluate the potential dangers of user-generated content. Left to their own devices, social media companies may set the standards that will best serve their own interests.

Consequences for free speech

Social media companies already fail to consistently enforce their own community standards. Under the UK government’s proposals, they would have to uphold a vaguely defined duty of care without adequate explanation of how to do so. In the absence of practical guidance for upholding that duty, they may simply continue to choose the path of least resistance by overzealously blocking questionable content.

The government’s proposals do not adequately demonstrate that the harms presented warrant severe potential limitations of free speech. In order to ensure that the online safety bill does not result in those unjustified restrictions, clearer guidance on the evaluation of online harms must be provided to regulators and the social media services concerned.

Claudine Tinsman has received funding from the Information Commissioner's Office as part of the 'Informing the Future of Data Protection by Design and by Default in Smart Homes' project.