Shutterstock/MartaDM

It’s widely believed that social media conspiracy theories are driven by malicious and anonymous “bots” set up by shadowy third parties. But my new research – which examined an extremely successful COVID-19 conspiracy theory – has shown that ordinary citizen accounts can be just as culpable when it comes to spreading dangerous lies and misinformation.

The pandemic has fuelled at least ten conspiracy theories this year. Some linked the spread of the disease to the 5G network, leading to phone masts being vandalised. Others argued that COVID-19 was a biological weapon. Research has shown that conspiracy theories could contribute to people ignoring social distancing rules.

The #FilmYourHospital movement was one such theory. It encouraged people to record videos of themselves in seemingly empty, or less-than-crowded, hospitals to prove the pandemic is a hoax. Many videos showing empty corridors and wards were shared.

Our research sought to identify the drivers of the conspiracy and examine whether the accounts that propelled it in April 2020 were bots or real people.

Scale of the conspiracy

The 5G conspiracy attracted 6,556 Twitter users over the course of a single week. The #FilmYourHospital conspiracy was much larger than 5G, with a total of 22,785 tweets sent over a seven-day period by 11,333 users. It also had strong international backing.

Wasim Ahmed, Author provided

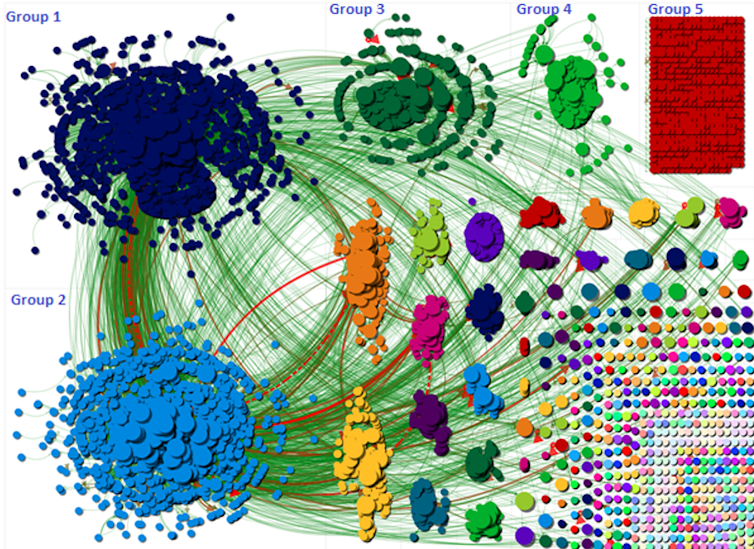

The visualisation above shows each Twitter user as a small circle and the overall discussion is clustered into a number of different groups. These groups are formed based on how users were mentioning and re-tweeting each other.

The visualisation highlights how the three largest groups were responsible for spreading the conspiracy the furthest. For instance, the discussion in groups one and two was centred around a single tweet that was highly re-tweeted. The tweet suggested the public were being misled and that hospitals were not busy or overrun – as had been reported by the mainstream media. The tweet then asked other users to film their hospitals using the hashtag so that it could become a trending topic. The graphic shows the reach and size of these groups.

Where are the bots?

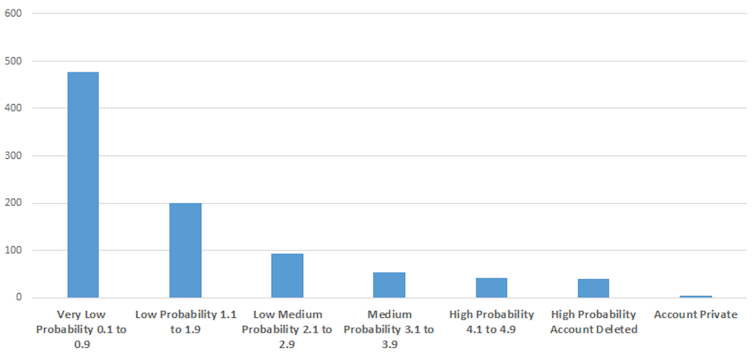

We used Botometer, a tool that draws on a machine learning algorithm, to detect bots. The tool calculates a score for each account, where a low score indicates human behaviour and a high score indicates a bot. Botometer works by extracting various features from an account such as its profile, friends, social network, patterns in temporal activity, language and sentiment. Our study took a 10% systematic representative sample of users to run through Botometer.
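To illustrate what a 10% systematic sample means in practice, here is a minimal Python sketch. It assumes the simplest form of systematic sampling (taking every tenth user from an ordered list); the function name, the placeholder user names and the starting position are hypothetical, and the study's exact procedure may have differed.

```python
def systematic_sample(items, fraction=0.10, start=0):
    """Pick every k-th item from an ordered list, where k = round(1 / fraction).

    Illustrative only: the article does not describe the exact sampling steps.
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    step = round(1 / fraction)  # 10% -> every 10th user
    return items[start::step]


# Placeholder user names; 11,333 is the number of users reported above.
users = [f"user_{i}" for i in range(11333)]
sample = systematic_sample(users)
print(len(sample), sample[:3])
```

Each sampled account would then be passed to the bot-detection tool; that step is left out here because it depends on the tool's own interface.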

Our results indicated that the rate of automated accounts was likely to be low. We used the raw scores from Botometer to attach a probability label indicating whether each account was likely to be a bot. The labels were very low, low, low-medium and high probability.
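The labelling step can be sketched roughly as follows. This assumes raw scores on a 0 to 1 scale, and the cut-off values are hypothetical placeholders: the article does not state which thresholds were used. The example scores are made up purely to show how the functions behave.

```python
# Hypothetical cut-offs; the thresholds used in the study are not given here.
THRESHOLDS = [(0.25, "very low"), (0.50, "low"), (0.75, "low-medium")]


def probability_label(score):
    """Map a raw score (assumed to lie between 0 and 1) to a probability label."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    for upper, label in THRESHOLDS:
        if score < upper:
            return label
    return "high"


def share_with_label(scores, label="high"):
    """Fraction of accounts whose score falls under the given label."""
    labels = [probability_label(s) for s in scores]
    return labels.count(label) / len(labels)


example_scores = [0.1, 0.9, 0.3, 0.8]  # made-up values, not study data
print([probability_label(s) for s in example_scores])
print(share_with_label(example_scores))
```

The share of accounts labelled "high" is the kind of figure that can then be reported as the proportion of likely bots in a sample.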

At most, only 9.2% of the sample that we looked at showed highly suspicious behaviour or resembled bots. That means over 90% of the accounts we examined were probably genuine.

Wasim Ahmed, Author provided

Interestingly, we also found that deleted accounts and automated accounts contained keywords such as “Trump” and “Make America Great Again” in their user bios. Around the same time, President Donald Trump had been in disagreement with scientific advisers on when to lift lockdown rules.

Where did it come from?

When we examined the most influential users connected to the hashtag, we found that the conspiracy theory was driven by influential conservative politicians as well as far-right political activists. Scholars have noted how the far right has been exploiting the pandemic. For example, some have set up channels on Telegram, a cloud-based instant messaging service, to discuss COVID-19 and have amplified disinformation.

But once the conspiracy theory began to generate attention, it was sustained by ordinary citizens. The campaign also appeared to be supported and driven by pro-Trump Twitter accounts, and our research found that some deleted accounts and some accounts that behaved like “bots” tended to be pro-Trump. It is important to note that not all accounts that behave like bots are bots, as highly active human users can also receive a high score. And, conversely, not all bots are harmful, as some have been set up for legitimate purposes.

Twitter users frequently shared YouTube videos in support of the theory and YouTube was an influential source.

Can they be stopped?

Social media organisations can monitor for suspicious accounts and content, and content that violates their terms of service should be removed quickly. Twitter experimented with attaching warning labels to tweets. This was initially unsuccessful because Twitter accidentally mislabelled some tweets, which might have inadvertently pushed conspiracies further. But if Twitter manages to develop a better labelling technique, this could be an effective method.

Conspiracies can also be countered by providing trustworthy information, delivered by public health authorities as well as popular culture “influencers”. For instance, Oldham City Council in the UK enlisted the help of actor James Buckley – famous for his role as Jay in the E4 sitcom The Inbetweeners – to spread public health messages.

Other research highlights that explaining flawed arguments and describing scientific consensus may help reduce the effect of misinformation. Sadly, no matter what procedures and steps are put in place, there will always be people who believe in conspiracies. The onus must be on the platforms to make sure these theories are not so easily spread.


Wasim Ahmed does not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.