Grzegorz Czapski/Shutterstock

Elon Musk thinks his company Tesla will have fully autonomous cars ready by the end of 2020. “There are no fundamental challenges remaining,” he said recently. “There are many small problems. And then there’s the challenge of solving all those small problems and putting the whole system together.”

While the technology to enable a car to complete a journey without human input (what the industry calls “level 5 autonomy”) might be advancing rapidly, producing a vehicle that can do so safely and legally is another matter.

There are indeed still fundamental challenges to the safe introduction of fully autonomous cars, and we have to overcome them before we see these vehicles on our roads. Here are five of the biggest remaining obstacles.

1. Sensors

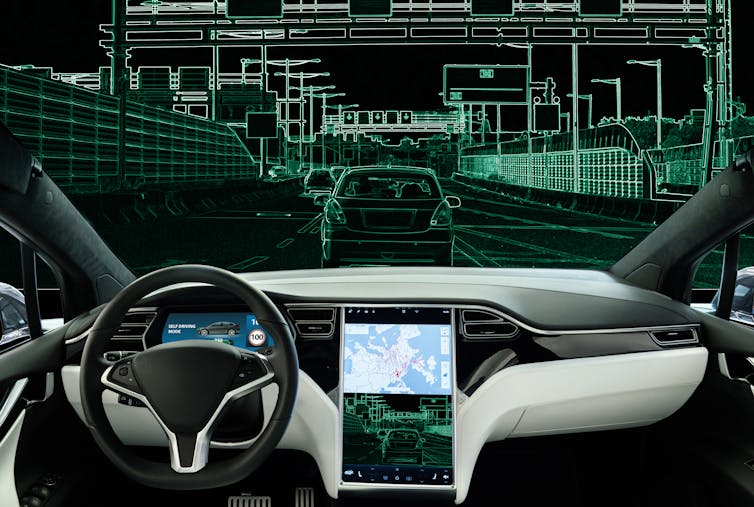

Autonomous cars use a broad set of sensors to “see” the environment around them, helping to detect objects such as pedestrians, other vehicles and road signs. Cameras help the car to view objects. Lidar uses lasers to measure the distance between objects and the vehicle. Radar detects objects and tracks their speed and direction.
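To make the lidar idea concrete, here is a minimal sketch of the simplest form of ranging, pulsed time-of-flight. It assumes the sensor times how long a laser pulse takes to reach an object and bounce back, and it ignores the small effect of air on the speed of light. Real lidar units may use other techniques, and the function name `lidar_range_m` is purely illustrative.

```python
# Speed of light in a vacuum, in metres per second (exact SI value).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

def lidar_range_m(round_trip_time_s: float) -> float:
    """Distance to a reflecting object from a laser pulse's round-trip time.

    Illustrative sketch: the pulse travels out to the object and back again,
    so the one-way distance is half of (speed of light x elapsed time).
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0

# A pulse that returns after 200 nanoseconds hit something about 30 metres away.
print(round(lidar_range_m(200e-9), 2))  # 29.98
```

The timing involved is tiny: an error of just one nanosecond shifts the estimate by about 15cm, which is one reason sensing accuracy is so demanding.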

These sensors all feed data back to the car’s control system or computer to help it make decisions about where to steer or when to brake. A fully autonomous car needs a set of sensors that accurately detect objects, distance, speed and so on under all conditions and environments, without a human needing to intervene.

Lousy weather, heavy traffic and road signs with graffiti on them can all reduce the accuracy of sensing. Radar, which Tesla uses, is less susceptible to adverse weather conditions, but challenges remain in ensuring that the sensors chosen for a fully autonomous car can detect all objects with the level of certainty needed for the car to be safe.

To enable truly autonomous cars, these sensors have to work in all weather conditions anywhere on the planet, from Alaska to Zanzibar and in congested cities such as Cairo and Hanoi. Accidents involving Tesla’s current “autopilot” (only level 2), including one in July 2020 in which a car hit parked vehicles, show the company has a big gap to close before it can offer such a global, all-weather capability.

2. Machine learning

Most autonomous vehicles will use artificial intelligence and machine learning to process the data that comes from their sensors and to help make decisions about their next actions. These algorithms will help identify the objects detected by the sensors and classify them, according to the system’s training, as a pedestrian, a street light, and so on. The car will then use this information to help decide whether it needs to take action, such as braking or swerving, to avoid a detected object.
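As a rough illustration of how a classification can feed into a decision, the sketch below assumes a hypothetical model that reports a label, a confidence score and a distance for each object in the car's path. The per-class safety margins, the confidence threshold and all names are invented for this example. Real driving software is far more complex. The point is that the chosen action depends on what the object is judged to be, and on how sure the model is.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    label: str         # class assigned by the (hypothetical) trained model
    confidence: float  # the model's score for that label, from 0.0 to 1.0
    distance_m: float  # distance to the object, in metres

# Hypothetical per-class margins in metres; values are illustrative only.
RESPONSE_DISTANCE_M = {"pedestrian": 30.0, "cyclist": 30.0, "car": 20.0}
CAUTIOUS_DISTANCE_M = 30.0  # used for unlisted or uncertain classifications
MIN_CONFIDENCE = 0.8        # below this score the label is not trusted

def choose_action(detections: list[Detection]) -> str:
    """Return 'brake' or 'continue', assuming every detection is in the car's path."""
    for d in detections:
        if d.confidence >= MIN_CONFIDENCE:
            # Trust the label and use the margin for that kind of object.
            margin = RESPONSE_DISTANCE_M.get(d.label, CAUTIOUS_DISTANCE_M)
        else:
            # The model is unsure what this is, so fall back to the widest margin.
            margin = CAUTIOUS_DISTANCE_M
        if d.distance_m < margin:
            return "brake"
    return "continue"

print(choose_action([Detection("pedestrian", 0.95, 25.0)]))  # brake
print(choose_action([Detection("car", 0.95, 25.0)]))         # continue
print(choose_action([Detection("car", 0.40, 25.0)]))         # brake
```

In the second case, the same object at the same distance is left alone only because the model is confident it is a car. This shows why a misclassification, or an unjustified level of confidence, matters for safety.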

In the future, machines will be able to do this detection and classification more efficiently than a human driver can. But at the moment there is no widely accepted basis for ensuring that the machine learning algorithms used in the cars are safe. There is no agreement across the industry, or across standardisation bodies, on how machine learning models should be trained, tested or validated.

Scharfsinn/Shutterstock

3. The open road

Once an autonomous car is on the road it will continue to learn. It will drive on new roads, detect objects it hasn’t come across in its training, and be subject to software updates.

How can we ensure that the system continues to be just as safe as its previous version? We need to be able to show that any new learning is safe and that the system doesn’t forget previously safe behaviours, something the industry has yet to reach agreement on.
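One small part of this problem can be sketched as a regression check. Here each version of the driving software is treated as a hypothetical function from a named test scenario to an action. The check flags any scenario the old version handled safely but the new version gets wrong. The scenario names and expected actions are invented for illustration. Passing such a check shows only that the listed cases still behave as before, not that the update is safe in general, and that gap is exactly what the industry has yet to agree on how to close.

```python
from typing import Callable, Dict, List

# A "policy" maps a named driving scenario to the action the software chooses.
Policy = Callable[[str], str]

def find_regressions(old: Policy, new: Policy, expected: Dict[str, str]) -> List[str]:
    """Scenarios the old version handled safely that the new version now gets wrong."""
    return [
        scenario
        for scenario, safe_action in expected.items()
        if old(scenario) == safe_action and new(scenario) != safe_action
    ]

# Hypothetical scenario library with the action judged safe in each case.
EXPECTED = {
    "pedestrian crossing ahead": "brake",
    "parked car in lane": "steer around",
    "clear motorway": "continue",
}

old_version = {
    "pedestrian crossing ahead": "brake",
    "parked car in lane": "steer around",
    "clear motorway": "continue",
}.get

# The updated version has "forgotten" how to deal with a parked car.
new_version = {
    "pedestrian crossing ahead": "brake",
    "parked car in lane": "continue",
    "clear motorway": "continue",
}.get

print(find_regressions(old_version, new_version, EXPECTED))  # ['parked car in lane']
```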

4. Regulation

Sufficient standards and regulations for a whole autonomous system do not exist – in any industry. Current standards for the safety of existing vehicles assume the presence of a human driver to take over in an emergency.

For self-driving cars, there are emerging regulations for particular functions, such as automated lane keeping systems. There is also an international standard for autonomous systems, including autonomous vehicles, which sets relevant requirements but does not solve the problems of sensors, machine learning and operational learning introduced above, although it may in time.

Without recognised regulations and standards, no self-driving car, whether considered to be safe or not, will make it on to the open road.

5. Social acceptability

There have been numerous high-profile accidents involving Tesla’s current automated cars, as well as with other automated and autonomous vehicles. Social acceptability is not just an issue for those wishing to buy a self-driving car, but also for others sharing the road with them.

The public needs to be involved in decisions about the introduction and adoption of self-driving vehicles. Without this, we risk the rejection of this technology.

The first three of these challenges must be solved to help us overcome the latter two. There is, of course, a race to be the first company to introduce a fully self-driving car. But without collaboration on how we make the car safe, provide evidence of that safety, and work with regulators and the public to get a “stamp of approval”, these cars will remain on the test track for years to come.

Unpalatable as it may be to entrepreneurs such as Musk, the road to getting autonomous vehicles approved is through lengthy collaboration on these hard problems around safety, assurance, regulation and acceptance.

John McDermid consults to/has share options in FiveAI, which is developing technology for autonomous vehicles (but is not producing vehicles and so does not compete with Tesla). He receives funding from Lloyd's Register Foundation for work on assurance of robotics and autonomous systems; the Foundation is a charity and does not manufacture or sell autonomous vehicles.